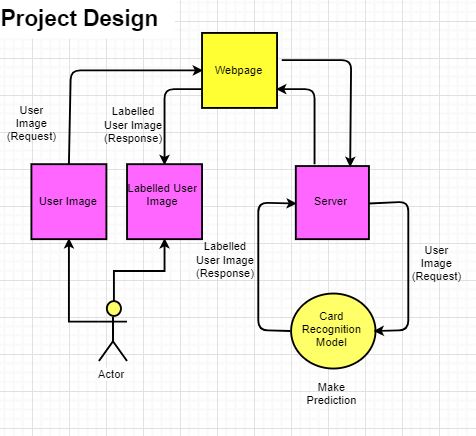

Project Design

Overview

Our project involves creating and labeling a dataset of card images in order to train a neural network to identify playing cards from a standard deck. The project will use the 24 cards involved in a game of Euchre: every card valued from 9 to ace in each of the four suits. This gives us a complete set of cards to work with and lets the group create a web application to interface with the model. Once the model can accurately identify any of the 24 possible cards in a Euchre deck, it will be deployed to our web application. A server will host the model and handle requests to identify cards within an image.
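
As an illustration of the 24-card scope, the class labels for the model could be enumerated as in the sketch below. The label format (rank followed by a suit initial) and the 1-based class IDs are assumptions made for this example, not decisions recorded in this design.

```python
from itertools import product

# Euchre uses the 9 through the ace of each of the four suits.
RANKS = ["9", "10", "J", "Q", "K", "A"]
SUITS = ["C", "D", "H", "S"]  # clubs, diamonds, hearts, spades

# Hypothetical label scheme: rank followed by suit initial, e.g. "10H".
CARD_LABELS = [rank + suit for suit, rank in product(SUITS, RANKS)]

# Assumed 1-based class IDs, leaving 0 free for a background class.
LABEL_TO_ID = {label: index for index, label in enumerate(CARD_LABELS, start=1)}

print(len(CARD_LABELS))   # 24
print(CARD_LABELS[:6])    # ['9C', '10C', 'JC', 'QC', 'KC', 'AC']
```

Keeping the label list in one place would let the labeling, training, and web application code share the same names.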

Environments and Languages

The following tools and environments will be used to develop the neural network:

- Linux/Unix OS, Mac OS X

- TensorFlow: Google's open-source machine learning framework

- labelImg: An open-source image annotation tool

- Google Cloud Platform: Google's suite of cloud-based computing services

- Python 2.7/3.6: The preferred programming language for working with TensorFlow

- PyScripter: A user-friendly, interactive Python IDE

- Internal tools: We will very likely find it helpful to build some of our own tools, typically in Python (for example, a script that rotates images in order to create more test images from a single picture). These will be added as we develop them. Stretch Goal 3 may be another tool that we find useful.
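
The image-rotation tool mentioned above could work along the lines of the following sketch. It is a simplified illustration, not the project's script: it represents an image as nested lists of pixel values and only handles quarter turns, whereas a real tool would probably use an imaging library. It also assumes bounding boxes are stored as `(xmin, ymin, xmax, ymax)`, the corner format labelImg writes in its Pascal VOC output, so that labels can follow the rotated picture. All function names are hypothetical.

```python
def rotate_grid_cw(pixels):
    """Rotate a 2D pixel grid (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*pixels[::-1])]


def rotate_box_cw(box, image_height):
    """Rotate an (xmin, ymin, xmax, ymax) box 90 degrees clockwise.

    image_height is the height of the image *before* rotation.
    """
    xmin, ymin, xmax, ymax = box
    return (image_height - ymax, xmin, image_height - ymin, xmax)


def quarter_turn_variants(pixels, box):
    """Return (pixels, box) pairs for 0, 90, 180 and 270 degree turns."""
    variants = [(pixels, box)]
    for _ in range(3):
        height = len(pixels)              # height before this turn
        box = rotate_box_cw(box, height)
        pixels = rotate_grid_cw(pixels)
        variants.append((pixels, box))
    return variants


# A tiny 4x2 "image" whose labeled region covers the pixels 2 and 3.
image = [[1, 2, 3, 4],
         [5, 6, 7, 8]]
card_box = (1, 0, 3, 1)

for rotated, rotated_box in quarter_turn_variants(image, card_box):
    print(rotated, rotated_box)
```

Each source picture yields four labeled samples this way, without any additional manual annotation.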

The following tools will be used to develop the web application:

- Linux/Unix OS, Mac OS X, Windows 8.1/10

- Python 2.7/3.6: The preferred programming language for working with TensorFlow

- PyScripter: A user-friendly, interactive Python IDE

- Django: A Python framework used for developing server-side software

- HTML, CSS

- JavaScript: A versatile, interactive web programming language

Module Description and Data Flow

This image shows the design for how the model is trained. We use the Google Cloud Platform for its powerful GPU hardware rather than our own commercially available hardware, which offers far less computing power. Using the platform's Machine Learning Engine, we train a card recognition model that can determine which cards appear in an image.

This image shows the web application design for the user interface. The user selects an image from the file explorer and clicks "send" to send the image to the server, which hosts the model, in order to evaluate which cards are in the hand.

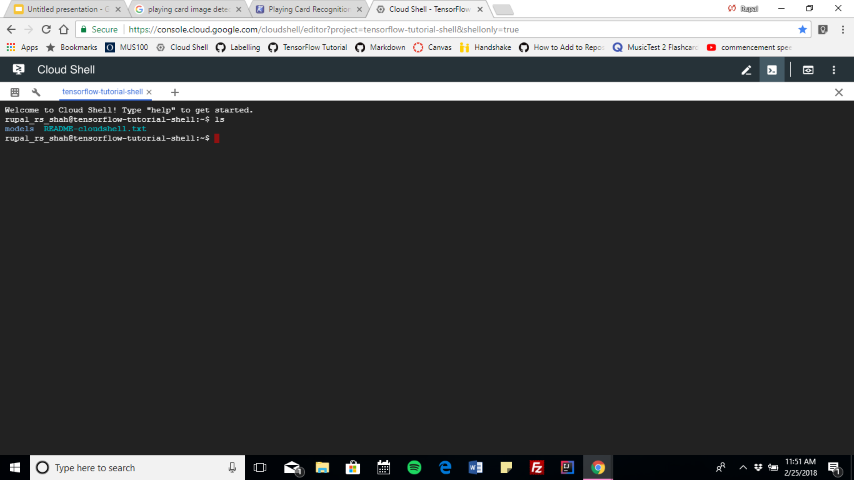
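
The server side of this data flow could be sketched roughly as follows. This is a framework-independent illustration under stated assumptions: the function names, the accepted file types, and the JSON response shape are all hypothetical, and `detect_cards` stands in for the trained model. In the actual application, logic like this would be called from a Django view.

```python
import json
import os

# Hypothetical whitelist of upload types; the design does not specify one.
ALLOWED_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def handle_upload(filename, image_bytes, detect_cards):
    """Validate an uploaded image and return (HTTP status, JSON body).

    detect_cards is a stand-in for the trained model: any callable that
    takes raw image bytes and returns a list of (label, score) pairs.
    """
    extension = os.path.splitext(filename)[1].lower()
    if extension not in ALLOWED_EXTENSIONS:
        return 400, json.dumps({"error": "unsupported file type"})
    if not image_bytes:
        return 400, json.dumps({"error": "empty upload"})

    detections = detect_cards(image_bytes)
    body = {
        "filename": filename,
        "cards": [
            {"label": label, "score": round(score, 3)}
            for label, score in detections
        ],
    }
    return 200, json.dumps(body)


def fake_detector(image_bytes):
    """Placeholder that always 'finds' two cards; not a real model."""
    return [("JS", 0.97), ("9H", 0.88)]


status, body = handle_upload("hand.png", b"placeholder image bytes", fake_detector)
print(status, body)
```

Passing the detector in as a parameter keeps the request handling testable without loading the model.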

Model Development Interface

Training the model was usually performed from this screen: the Google Cloud Platform’s shell, which hosted a Linux environment and proved especially useful for those with a Windows-based PC.

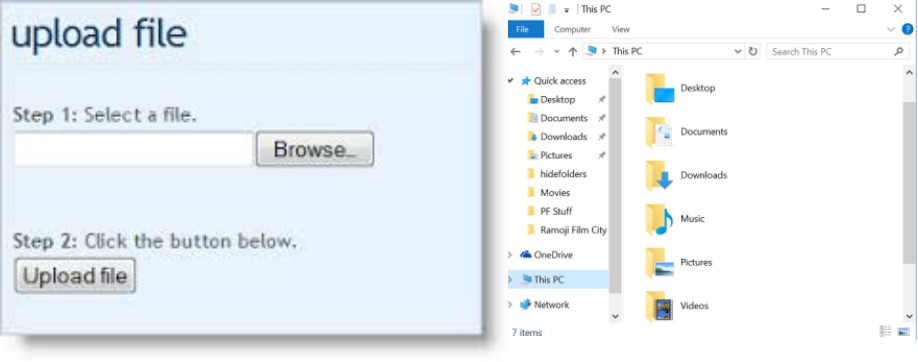

User Interface Design

First, the user will be able to go to the application web page and use this simple UI to upload a photo to the server.

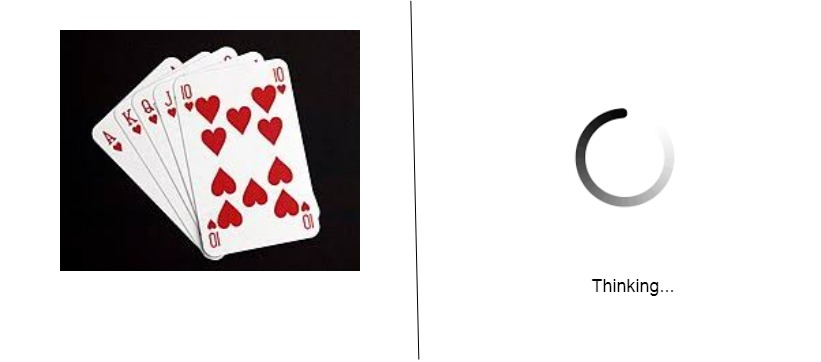

After clicking “upload photo”, the user will be shown a divided page: one side will hold their image, and the other side will show a loading screen.

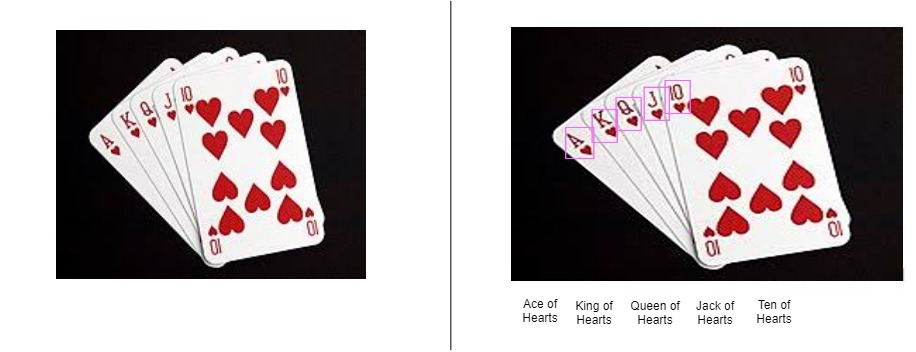

After the server has received the image, used the model to predict the cards held in the image, and returned the resulting image and labels, the user will be shown a screen like this:

User Scenarios

- The user wants to see a single card identified by the model. They take a picture of the card with their webcam, click the browse button, navigate to where their photos are stored, select the photo of the card, and click the upload photo button. Next, the app displays the user's image and lets the user know that it is attempting to label the card. The application then sends the photo to the server, which labels the image and sends it back to the user along with the labels for the cards it recognized.

- The user wants to see several cards identified by the model. They take a picture containing multiple cards with their webcam, click the browse button, navigate to where their photos are stored, select the photo of the cards, and click the upload photo button. Next, the app displays the user's image and lets the user know that it is attempting to label the cards. The application then sends the photo to the server, which labels the various cards in the image and sends it back to the user along with the labels for the cards it recognized.